13. Cloud Logging and Monitoring for BigQuery

2024. 11. 21. 08:16ㆍ클라우드/GCP

PART 1: Analyze Cloud Logging logs

Task 1: Open BigQuery

Task 2: Create a dataset

| SELECT current_date |

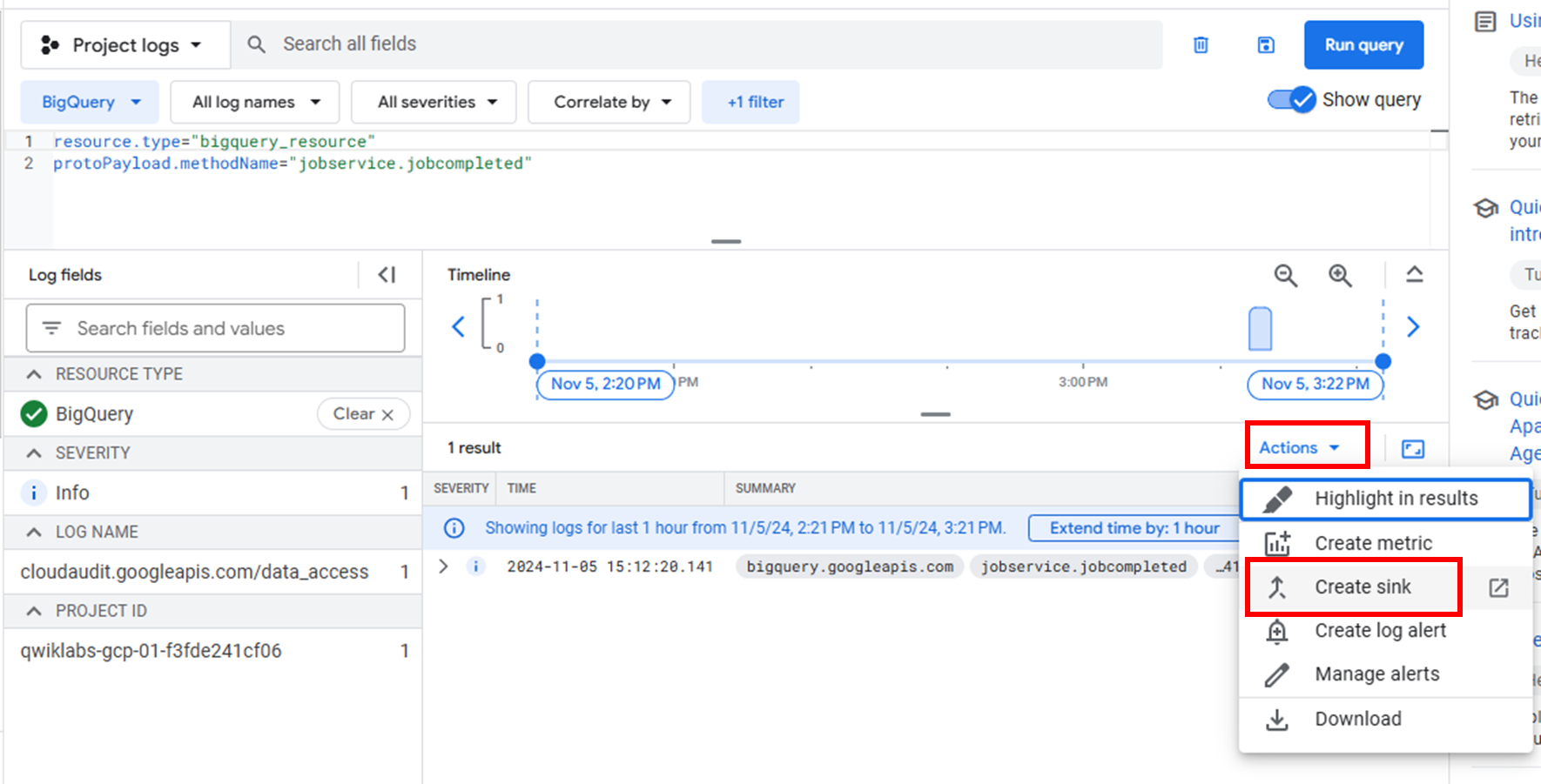

Task 4: Set up log export from Cloud Logging

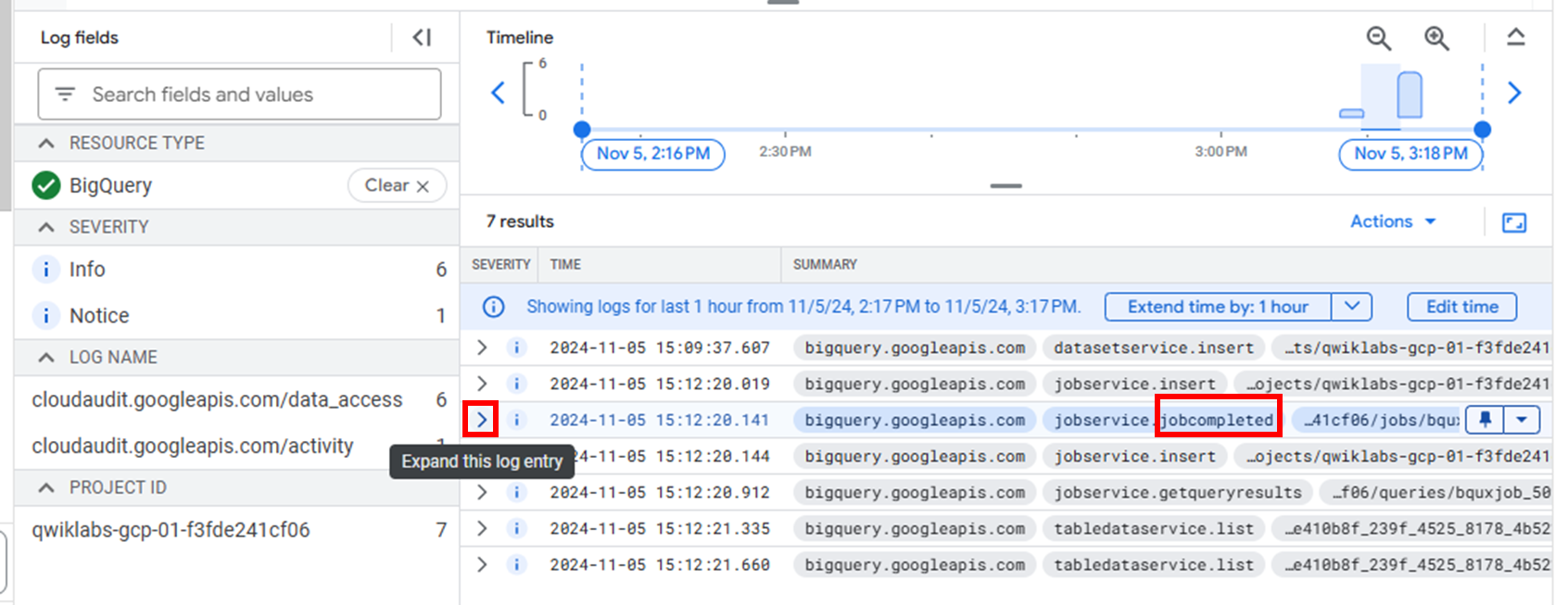

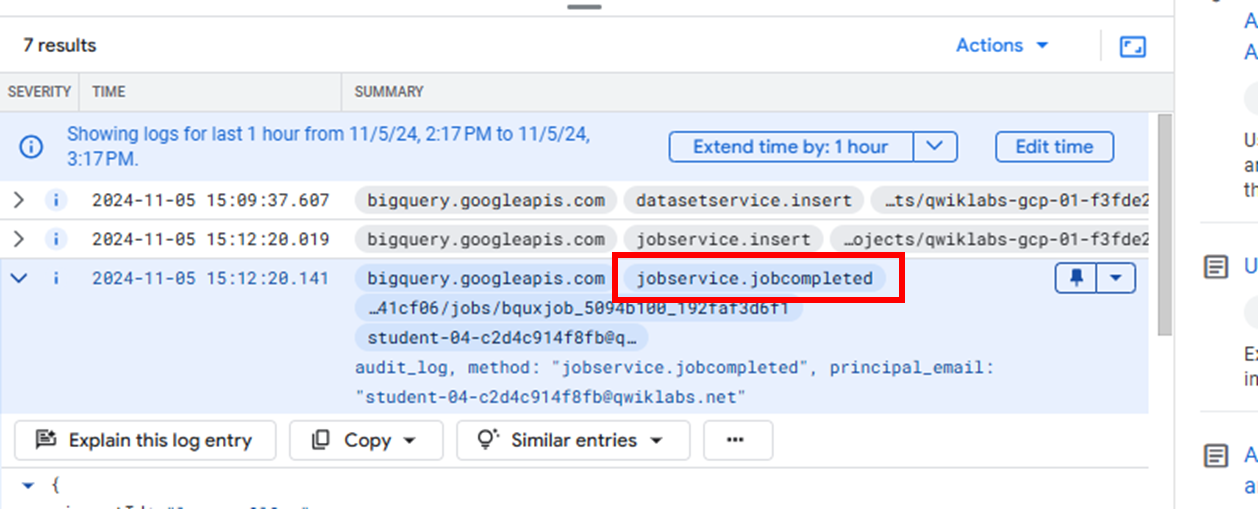

Look for the entry that contains the word "jobcompleted".

Create Sink

Task 5: Run example queries

| bq query --location=us --use_legacy_sql=false --use_cache=false \ 'SELECT fullName, AVG(CL.numberOfYears) avgyears FROM `qwiklabs-resources.qlbqsamples.persons_living`, UNNEST(citiesLived) as CL GROUP BY fullname' |

| bq query --location=us --use_legacy_sql=false --use_cache=false \ 'select month, avg(mean_temp) as avgtemp from `qwiklabs-resources.qlweather_geo.gsod` where station_number = 947680 and year = 2010 group by month order by month' |

| bq query --location=us --use_legacy_sql=false --use_cache=false \ 'select CONCAT(departure_airport, "-", arrival_airport) as route, count(*) as numberflights from `bigquery-samples.airline_ontime_data.airline_id_codes` ac, `qwiklabs-resources.qlairline_ontime_data.flights` fl where ac.code = fl.airline_code and regexp_contains(ac.airline , r"Alaska") group by 1 order by 2 desc LIMIT 10' |

Task 6: Viewing the logs in BigQuery

| CREATE OR REPLACE VIEW bq_logs.v_querylogs AS SELECT resource.labels.project_id, protopayload_auditlog.authenticationInfo.principalEmail, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobConfiguration.query.query, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobConfiguration.query.statementType, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatus.error.message, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.startTime, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.endTime, TIMESTAMP_DIFF(protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.endTime, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.startTime, MILLISECOND)/1000 AS run_seconds, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.totalProcessedBytes, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.totalSlotMs, ARRAY(SELECT as STRUCT datasetid, tableId FROM UNNEST(protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.referencedTables)) as tables_ref, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.totalTablesProcessed, protopayload_auditlog.servicedata_v1_bigquery.jobCompletedEvent.job.jobStatistics.queryOutputRowCount, severity FROM `<YOUR-PROJECT-ID>.bq_logs.cloudaudit_googleapis_com_data_access_*` ORDER BY startTime |

| SELECT * FROM bq_logs.v_querylogs |

PART 2: Cloud Monitoring for BigQuery

| #standardSQL CREATE SCHEMA log_mon OPTIONS( location="US" ); |

| #standardSQL CREATE OR REPLACE TABLE log_mon.base_table AS ( SELECT * FROM `bigquery-public-data.wikipedia.pageviews_2021` WHERE DATE(datehour) >= DATE(2021,11,1)); |

| #standardSQL SELECT COUNT(views) AS views_to_G_pages FROM log_mon.base_table WHERE DATE(datehour) >= DATE(2021,12,1) AND title LIKE 'G%' |

Task 2: Use the BigQuery Dashboard

Task 3: Import the BigQuery Query Monitoring Dashboard

BigQuery는 스캔된 데이터의 양, 저장된 데이터 크기, 스트리밍 삽입 및 쿼리 실행과 같은 사용량에 따라 비용을 산정한다는데 솔직히 잘 모르겠다.

내가 데이터셋의 모든 테이블을 조회하면 그만큼 금액이 나오는건지??

참고 : https://youtu.be/hlRZbviWpOs?si=akwigDIzi-YXoGNb

반응형

'클라우드 > GCP' 카테고리의 다른 글

| 15. Migrate to Cloud SQL for PostgreSQL using Database Migration Service (0) | 2024.11.23 |

|---|---|

| 14. Cloud Composer: Copying BigQuery Tables Across Different Locations (0) | 2024.11.22 |

| 그림으로 배우는 구글 클라우드 101 (2) | 2024.11.20 |

| 12. BigQuery Soccer Data Analytical Insight (0) | 2024.11.20 |

| 11. Setting Up Network and HTTP Load Balancers [ACE] (0) | 2024.11.19 |